New functionality for swingbench…

A small but significant set of changes Last weekend I updated swingbench to add a few features that I’ve had frequent requests for. One is the addition of da...

Hybrid Partitioned tables continues to be one of my favorite features of Oracle Database 19c and they’ve gotten better over time as we’ve introduced new features. One feature that I really like about them is the ability to exchange external tables with partitions internal to the database. The exchanged partition now points at whatever the external table was managing. This could be a CSV on a cooked file system or a Parquet file on object storage. This approach enables you to move cold or read-only data onto lower-cost storage, reducing costs and management overhead. The external partitions can reside on-premises or in the cloud. It’s a fantastic feature.

Where it becomes really useful is when data has some temporal dimension (orders, trades, telephone calls etc.). This type of data typically has a period where it’s ingested and potentially updated and then frequently read. It’s then typically only accessed for reporting. And as time goes on even this happens less and less frequently. In many instances, it’s only held in the database for regulatory requirements. As a result, it becomes an expensive burden to manage and provides little value to the business. Oracle Database provides a number of features to make this simpler to manage such as

And many many more. Check out the documentation here

But the functionality I wanted to discuss today is Hybrid Partitioning in the cloud using object storage. And more specifically Oracle Autonomous Database. Because it’s a little different because of the nature of the platform and some of the necessary restrictions it places on you.

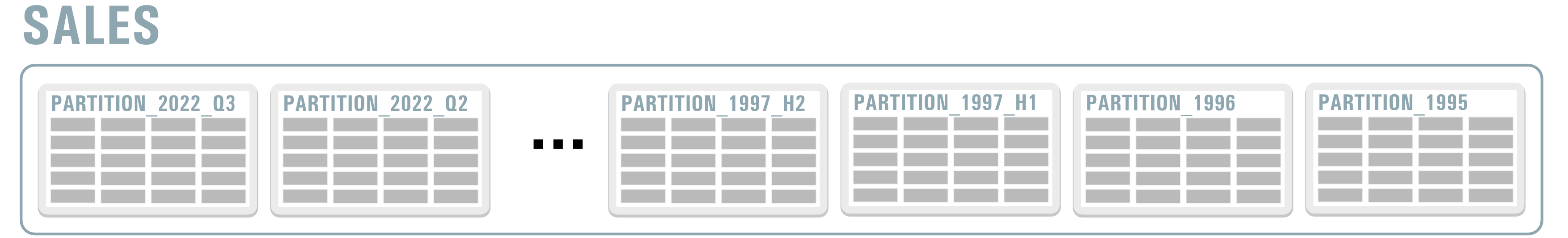

To highlight how simple it is to leverage Hybrid Partitioned Tables on Oracle Autonomous Database I’m going to use a dataset that’s generated for my swingbench Sales History benchmark. Specifically, we’ll be looking at the SALES table. Its range partitioned on the TIME_ID column or type DATE. To start with the data is partitioned by quarter, as it gets older the quarters are periodically merged into bi-annual partitions and then yearly partitions. In Oracle Database all of these operations can be done online with the potential to compress and sort them and even make them read-only (to save having to constantly back them up). A simple diagram is shown below.

The code used in this example can be found in this gist

In the following example we’ll make a copy of the SALES table as a hybrid partitioned table as we need to start with something. As I mentioned I’ll be doing this on Oracle Autonomous Database so before we do anything we’ll need to create some credentials to allow us to access object storage. There’s a lot of documentation on creating credentials and I’ll be using an authentication token to do so. And before you comment, this is not my username and this is not a valid authentication token.

begin

dbms_cloud.create_credential(

credential_name => 'obj_store_cred2',

username => 'domgiles',

password => 'HSKDKSDJJADA!JD'

);

end;

NOTE : I’m doing this in a schema called SALES and I’ve granted execute on DBMS_CLOUD to the SALES user.

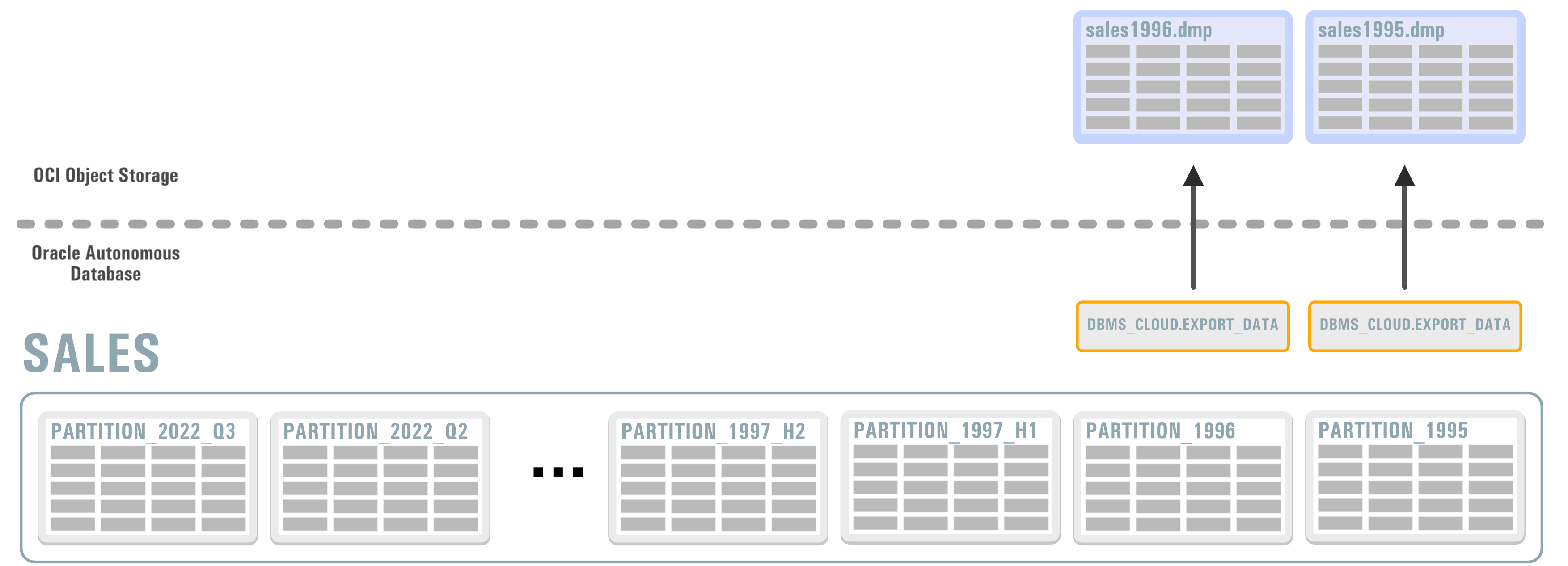

Now that we can access buckets in our object storage let’s move the data we want to stage on object storage. To do this we use the DBMS_CLOUD.EXPORT_DATA command and move it into a bucket we’ve previously created. The reason we are doing this is that currently the EXCHANGE operation won’t automatically move it for us.

BEGIN

DBMS_CLOUD.EXPORT_DATA(

credential_name =>'obj_store_cred2',

file_uri_list =>'https://objectstorage.uk-london-1.oraclecloud.com/n/oradbclouducm/b/ext-table/o/sales1995.dmp',

format => json_object('type' value 'datapump'),

query => 'SELECT * FROM sales partition(sales_1995)'

);

END;

BEGIN

DBMS_CLOUD.EXPORT_DATA(

credential_name =>'obj_store_cred2',

file_uri_list =>'https://objectstorage.uk-london-1.oraclecloud.com/n/oradbclouducm/b/ext-table/o/sales1996.dmp',

format => json_object('type' value 'datapump'),

query => 'SELECT * FROM sales partition(sales_1996)'

);

END;

Here I’ve copied/exported two partitions of data. My two oldest. Normally this would have been from the source table but for now, just imagine that this was from the table we are about to create. I’ve exported them into Oracle Datapump format.

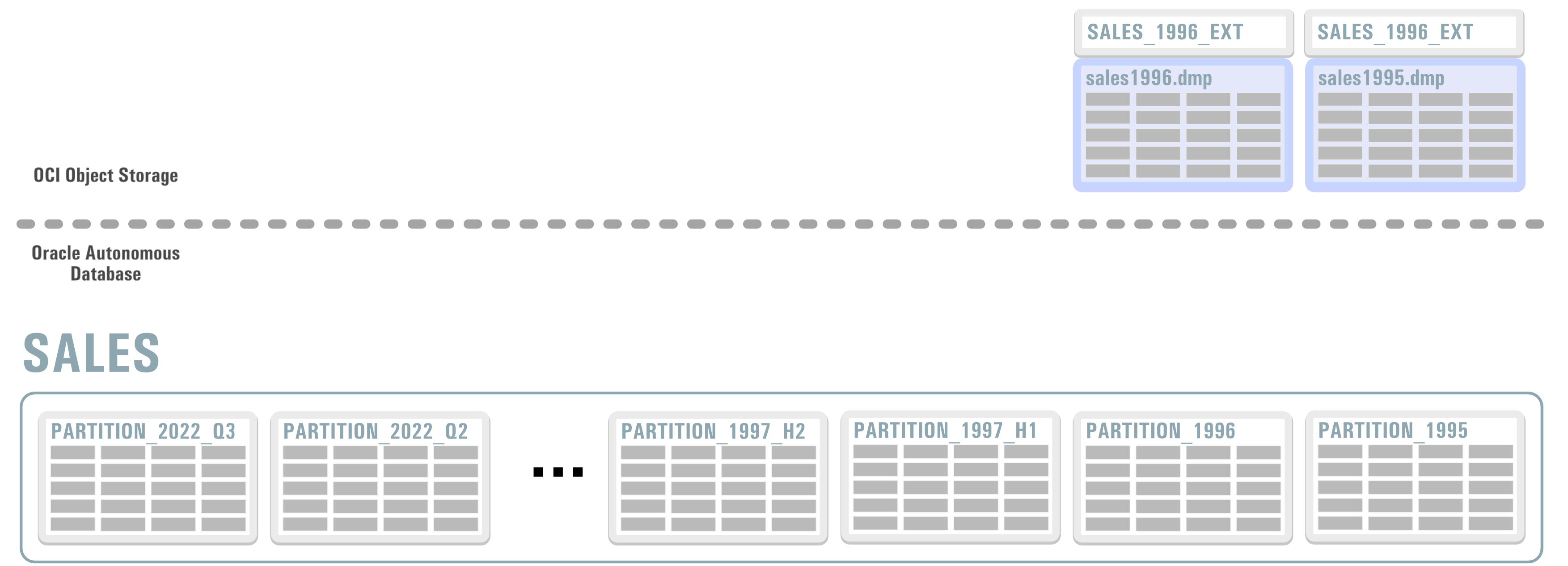

Now that we’ve copied the data from the database onto my object storage I next need to create external tables that map to the datapump files held on object storage. Instead of using DDL as we would if were doing this on-premises the approach to creating the table is a little different. We need to use the PL/SQL package DBMS_CLOUD the same one we used to create our credentials and export our data.

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name =>'SALES_1995_EXT',

credential_name =>'obj_store_cred2',

file_uri_list =>'https://objectstorage.uk-london-1.oraclecloud.com/n/oradbclouducm/b/ext-table/o/sales1995.dmp',

format => json_object('type' value 'datapump'),

column_list => 'PROD_ID NUMBER NOT NULL ENABLE, CUST_ID NUMBER NOT NULL ENABLE, TIME_ID DATE NOT NULL ENABLE, CHANNEL_ID NUMBER NOT NULL ENABLE, PROMO_ID NUMBER NOT NULL ENABLE, QUANTITY_SOLD NUMBER(10,2) NOT NULL ENABLE, SELLER NUMBER(6,0) NOT NULL ENABLE, FULFILLMENT_CENTER NUMBER(6,0) NOT NULL ENABLE, COURIER_ORG NUMBER(6,0) NOT NULL ENABLE, TAX_COUNTRY VARCHAR2(3) COLLATE USING_NLS_COMP NOT NULL ENABLE, TAX_REGION VARCHAR2(3) COLLATE USING_NLS_COMP, AMOUNT_SOLD NUMBER(10,2) NOT NULL ENABLE'

);

END;

BEGIN

DBMS_CLOUD.CREATE_EXTERNAL_TABLE(

table_name =>'SALES_1996_EXT',

credential_name =>'obj_store_cred2',

file_uri_list =>'https://objectstorage.uk-london-1.oraclecloud.com/n/oradbclouducm/b/ext-table/o/sales1996.dmp',

format => json_object('type' value 'datapump'),

column_list => 'PROD_ID NUMBER NOT NULL ENABLE, CUST_ID NUMBER NOT NULL ENABLE, TIME_ID DATE NOT NULL ENABLE, CHANNEL_ID NUMBER NOT NULL ENABLE, PROMO_ID NUMBER NOT NULL ENABLE, QUANTITY_SOLD NUMBER(10,2) NOT NULL ENABLE, SELLER NUMBER(6,0) NOT NULL ENABLE, FULFILLMENT_CENTER NUMBER(6,0) NOT NULL ENABLE, COURIER_ORG NUMBER(6,0) NOT NULL ENABLE, TAX_COUNTRY VARCHAR2(3) COLLATE USING_NLS_COMP NOT NULL ENABLE, TAX_REGION VARCHAR2(3) COLLATE USING_NLS_COMP, AMOUNT_SOLD NUMBER(10,2) NOT NULL ENABLE'

);

END;

This creates two external tables SALES_1995_EXT and SALES_1996_EXT.

The next step is to create a hybrid partitioned table. Outside of this example, it would have been the first thing that had been created and populated over time.

NOTE I haven’t included all of the partitions that would normally be created simply to make the example more manageable. I’ve also mapped one of the external dmp files as the source for an external partition. As it stands today you must have at least one external partition when using this call to create a hybrid partitioned table. This isn’t a requirement of the DDL and I’ve raised a bug to get this looked at.

BEGIN

DBMS_CLOUD.CREATE_HYBRID_PART_TABLE(

table_name =>'SALES_HYBRID',

credential_name =>'obj_store_cred2',

format => json_object('type' value 'datapump'),

column_list => 'PROD_ID NUMBER NOT NULL ENABLE, CUST_ID NUMBER NOT NULL ENABLE, TIME_ID DATE NOT NULL ENABLE, CHANNEL_ID NUMBER NOT NULL ENABLE, PROMO_ID NUMBER NOT NULL ENABLE, QUANTITY_SOLD NUMBER(10,2) NOT NULL ENABLE, SELLER NUMBER(6,0) NOT NULL ENABLE, FULFILLMENT_CENTER NUMBER(6,0) NOT NULL ENABLE, COURIER_ORG NUMBER(6,0) NOT NULL ENABLE, TAX_COUNTRY VARCHAR2(3) COLLATE USING_NLS_COMP NOT NULL ENABLE, TAX_REGION VARCHAR2(3) COLLATE USING_NLS_COMP, AMOUNT_SOLD NUMBER(10,2) NOT NULL ENABLE',

partitioning_clause => 'PARTITION BY RANGE (TIME_ID)

(PARTITION SALES_1995 VALUES LESS THAN (TO_DATE(''1996-01-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN''))

external location (''https://objectstorage.uk-london-1.oraclecloud.com/n/oradbclouducm/b/ext-table/o/sales1995.dmp''),

PARTITION SALES_1996 VALUES LESS THAN (TO_DATE(''1997-01-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_H1_1997 VALUES LESS THAN (TO_DATE('' 1997-07-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_H2_1997 VALUES LESS THAN (TO_DATE('' 1998-01-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_Q1_1998 VALUES LESS THAN (TO_DATE('' 1998-04-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_Q2_1998 VALUES LESS THAN (TO_DATE('' 1998-07-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_Q3_1998 VALUES LESS THAN (TO_DATE('' 1998-10-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_Q4_1998 VALUES LESS THAN (TO_DATE('' 1999-01-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN'')),

PARTITION SALES_Q1_1999 VALUES LESS THAN (TO_DATE('' 1999-04-01 00:00:00'', ''SYYYY-MM-DD HH24:MI:SS'', ''NLS_CALENDAR=GREGORIAN''))

)'

);

END;

As it stands now this external table only has data for 1995 in it.

SALES@domsatp 🍷 > select count(1) from sales_hybrid;

COUNT(1)

----------

26064

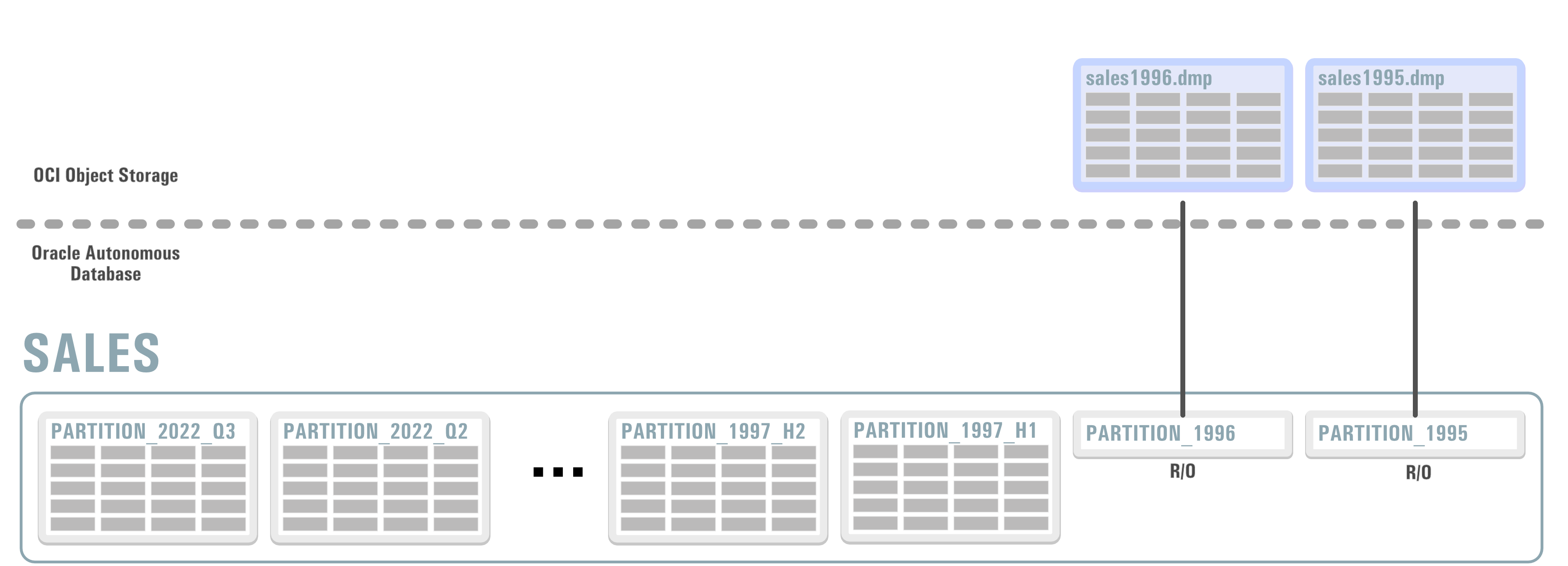

Now we are in a position to swap the external table SALES_1996_EXT with the internal parition SALES_1996. To do so we just use the command

alter table sales_hybrid exchange partition SALES_1996 with table SALES_1996_EXT;

This results in the following

We can see this in the USER_TAB_PARTITONS

SALES@domsatp 🍷 > select TABLESPACE_NAME, READ_ONLY from USER_TAB_PARTITIONS where table_name = 'SALES_HYBRID' and PARTITION_NAME = 'SALES_1996';

TABLESPACE_NAME READ

------------------------------ ----

SYSTEM YES

NOTE : There isn’t a column that specifically shows a partition as being external but the fact it’s listed as being the SYSTEM tablespace

And querying the SALES_HYBRID tables now shows an increased number of rows as a result of the data in the external partitions.

SALES@domsatp 🍷 > select count(1) from sales_hybrid;

COUNT(1)

----------

55995

And that’s it… Hybrid Partitioned Tables. A really powerful feature that makes it simple to take advantage of cost effective object storage whilst reducing the overhead of managing large tables.

A small but significant set of changes Last weekend I updated swingbench to add a few features that I’ve had frequent requests for. One is the addition of da...

A new build of Swingbench with a whole bunch of small changes mainly related to the benchmarks. Faster build time for the JSON benchmark when creating lar...

In my previous post “Going Big” I discussed the difference testing a data set of a representative size can make to the usefulness of a benchmark. Running a w...

Swingbench makes it simple to generate a dataset that you can simulate transactions against. However one of the problems I commonly see is that the users of ...

To celebrate the new release of “Oracle Database Free 23c : Developer Release” I’m releasing a new build of swingbench. This build includes a new “Movie Stre...

Swingbench 2.7 So I finally found some time to get a new build of swingbench done. The big change is that swingbench now only supports JDK-17 and above. Now,...

Hybrid Partitioned tables continues to be one of my favorite features of Oracle Database 19c and they’ve gotten better over time as we’ve introduced new feat...

Following quickly on the heels of the update to MonitorDB I’m releasing a new build of DBTimeMonitor. This is a simple update using the latest Oracle jdbc dr...

I’ve just updated MonitorDB. It’s never going to replace Grafana but if you need a quick solution to monitor a few values inside of the Oracle Database and y...

This short blog isn’t about best practices when conducting benchmarks. I think there are plenty of formal papers on the subject available with just a quick G...

I’ll make a confession. I don’t own a Microsoft Windows Machine. I never have and probably never will. This means that I generally never get the oppertunity ...

A New Release of Swingbench… Along with a new website, I’m rolling out a new build of Swingbench. This release is a little embarrassing for me, as it include...

A New Start… Quite a lot has changed since I last posted on this website. I had just left Oracle to join Google… A year and a half later I returned to Oracle...